For the past few years, the conversation about AI and the future of work has been dominated by a single, anxious question: "Which human skills will survive automation?" This is the wrong question. It's like asking which horse-riding techniques will remain relevant after the invention of the automobile. It's a question that looks in the rearview mirror.

The reality is that AI is not a simple evolution in tooling. It is a fundamental fracture in the traditional distribution of power. It must be understood not as a tool for productivity, but as a foundational "agency multiplier." It gives individuals and small teams the power to achieve outcomes, and to generate risks, that were once the exclusive domain of large corporations and nation-states.

This isn't a gradual shift. It is a sudden, asymmetric redistribution of capability that is already creating deep fractures across our economic, political, and knowledge landscapes. To understand the future, we must stop asking what jobs AI will take, and start examining the new forms of power it is creating.

The Economic Fracture: The Rise of the Micro-Corporation

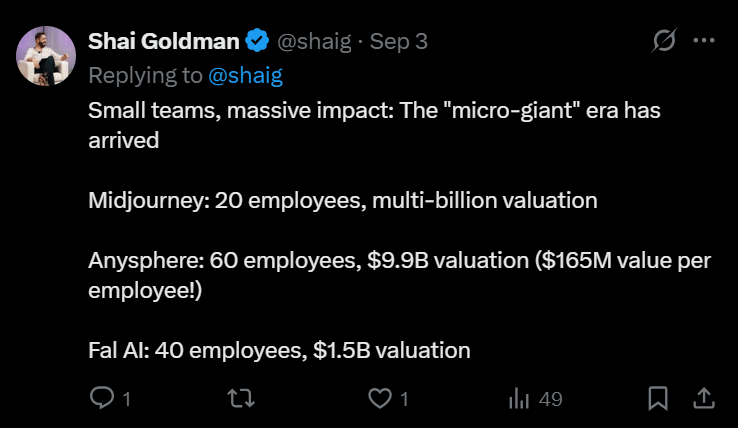

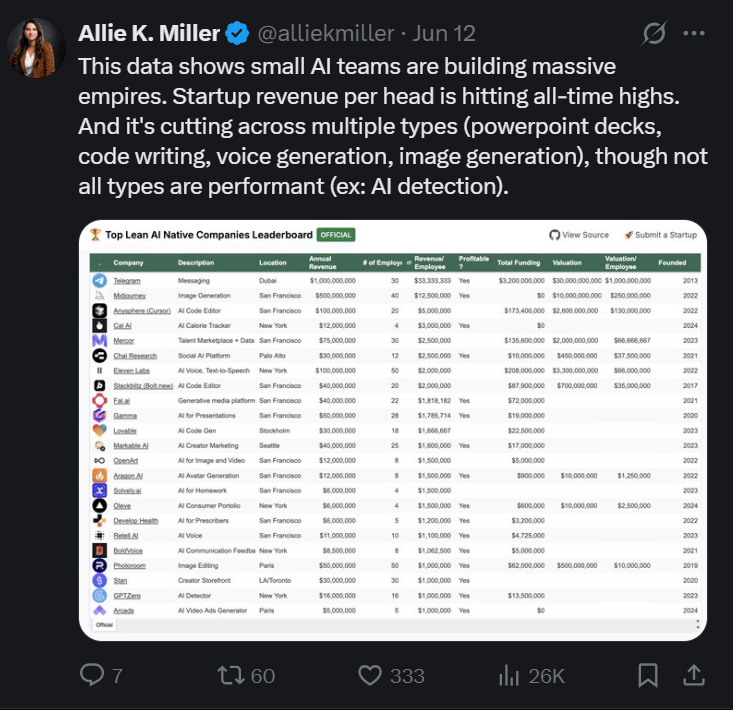

The most visible tremor of this fracture is reshaping the economy. The traditional link between scale, manpower, and value creation has been severed. Consider the generative art platform Midjourney. In 2023, with just 11 employees, the self-funded company reached an estimated annual recurring revenue (ARR) of $200 million. By 2024, with a team of only 40, it was reportedly approaching $500 million in annual revenue. This translates to an ARR per employee of up to $18.2 million (the 2023 figure; roughly $12.5 million on the 2024 numbers), which shatters the benchmarks of traditional SaaS companies, where ARR per employee typically ranges from $200k to $500k.
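The per-employee figures can be checked with a few lines of arithmetic. This is only a sketch using the estimates quoted above; the `SAAS_BENCHMARK_HIGH` constant and the function name are illustrative choices, not taken from any source.

```python
# Estimated figures as quoted in the text (reported, not audited).
snapshots = {
    2023: {"arr_usd": 200_000_000, "employees": 11},
    2024: {"arr_usd": 500_000_000, "employees": 40},
}

# Upper end of the traditional SaaS range cited in the text ($500k).
SAAS_BENCHMARK_HIGH = 500_000


def arr_per_employee(arr_usd, employees):
    """Annual recurring revenue divided by headcount."""
    return arr_usd / employees


for year, s in snapshots.items():
    ratio = arr_per_employee(s["arr_usd"], s["employees"])
    multiple = ratio / SAAS_BENCHMARK_HIGH
    print(f"{year}: ${ratio / 1e6:.1f}M per employee "
          f"({multiple:.0f}x the top of the SaaS range)")
# 2023: $18.2M per employee (36x the top of the SaaS range)
# 2024: $12.5M per employee (25x the top of the SaaS range)
```

Even measured against the most generous end of the traditional range, the gap is well over an order of magnitude.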

https://x.com/shaig/status/1963283169995542772

This is not merely market disruption; it is a textbook example of what the economist Joseph Schumpeter famously called "creative destruction": the process by which new innovations demolish old economic structures from within. Here, AI acts as the catalyst, allowing new entrants to replace entire business models not with a slightly better alternative, but with a system operating on entirely different principles of value creation.

But this new power comes with a new class of risk. A 40-person team is now responsible for a global service with millions of users, creating immense operational fragility. Simultaneously, this tiny company finds itself the defendant in a landmark copyright lawsuit brought by titans like Disney and Universal. This is a legal risk profile previously reserved for multinational corporations, now shouldered by a micro-team.

This dynamic gives rise to what can be called "critical micro-infrastructures." Tools like Midjourney or the code editor Cursor (which reached $100M ARR with 20 engineers) are not simple apps; they become foundational building blocks in the workflows of millions. Their failure, due to operational or legal fragility, could have cascading impacts on thousands of dependent businesses, a systemic risk analogous to the interconnection of financial markets.

https://x.com/alliekmiller/status/1933212313785369014

The Political Fracture: The Weaponization of Narrative

If the economic fracture is a tremor, the political fracture attacks the bedrock of our societies: shared reality. Sophisticated information warfare, once the domain of state intelligence agencies, has been democratized.

The 2023 Slovak parliamentary election provides a chilling case study. Just two days before the vote, during a legally mandated media blackout, a high-fidelity audio deepfake went viral. In the recording, the leading pro-Western candidate, Michal Šimečka, appeared to discuss rigging the election. Although the recording was quickly exposed as a fake, the damage was done. Šimečka, who had been leading in the polls, narrowly lost. Analysts and the candidate himself attributed the defeat, at least in part, to the deepfake's impact.

This is a textbook case of asymmetric warfare. The anonymous creator acts as a modern version of the "partisan," a concept explored by theorist Carl Schmitt. This is an irregular combatant who uses the digital terrain not to seize territory, but to hijack the cognitive space of an entire electorate. Their weapon is not a bomb, but a carefully crafted piece of information designed to inflict maximum political damage with minimal resources.

The amplified risk goes beyond a single election. As the public learns that any audio or video can be convincingly faked, we enter the era of the "Liar's Dividend." This phenomenon, documented by institutions like the Brennan Center for Justice, describes a risk deeper than believing the fake: it is the risk of no longer believing the truth. Malicious actors caught in real scandals can plausibly deny real evidence by claiming it's a deepfake, eroding accountability for everyone. This provokes a "collapse of the tiers of evidence." Historically, video was stronger proof than audio, which was stronger than text. Generative AI flattens this hierarchy. A realistic deepfake now has the same initial plausibility as an authentic recording, shifting the burden of proof onto the viewer to disprove every piece of media they encounter, a cognitively overwhelming task.

The Epistemic Fracture: The Automation of Falsehood

This crisis of trust runs deeper still, poisoning the very wells from which we draw our knowledge. The greatest threat to science is no longer just plagiarism, but the industrial-scale manufacturing of "authentic falsehoods."

An investigation into the Global International Journal of Innovative Research (GIJIR) revealed a new kind of academic fraud. The affair was uncovered by Professor Diomidis Spinellis, who discovered an entirely AI-generated article published under his own name without his knowledge. His investigation revealed the practice was systemic: of 53 articles examined, at least 48 were almost entirely generated by AI, with detection scores reaching 100%. The journal was not just publishing fakes; it was falsely attributing them to real, respected researchers, sometimes even to academics who were already deceased.

This is more than just a fake; it is a perfect example of what the philosopher Jean Baudrillard called a simulacrum: a copy without an original. The AI-generated paper is a simulation of science so perfect it can enter the real world's knowledge base, despite being entirely detached from any empirical truth. This enables a sophisticated form of deception called "citation laundering." A malicious actor can use AI to generate a group of fake papers (A, B, C) that cite each other to validate their fabricated conclusions. Then, a more sophisticated paper (D) is produced, citing A, B, and C as its "evidence base." For an unsuspecting researcher, paper D appears legitimate, and its false conclusions are laundered into the authentic body of knowledge. The ultimate systemic risk is "model collapse," a terrifying feedback loop in which each new generation of AI models is trained on the fabricated outputs of its predecessors, progressively degrading the reliability of all future models.
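The citation-laundering pattern has a recognizable shape when the literature is viewed as a directed graph: a closed ring of papers that cite one another, plus a downstream paper whose entire reference list sits inside that ring. The sketch below illustrates that shape on toy data. The paper labels, the `citations` dictionary, and the function names are all hypothetical, and a real ring would likely pad its references with genuine work, so this is a structural illustration rather than a fraud detector. Tight citation clusters also occur in legitimate research communities.

```python
# Toy citation graph: each paper maps to the set of papers it cites.
# A, B, C form the fabricated ring; D rests entirely on them.
# E is an ordinary paper citing unrelated works X and Y.
citations = {
    "A": {"B", "C"},
    "B": {"A", "C"},
    "C": {"A", "B"},
    "D": {"A", "B", "C"},
    "E": {"X", "Y"},
    "X": set(),
    "Y": set(),
}


def reachable(graph, start):
    """All papers reachable from `start` by following citations."""
    seen, stack = set(), [start]
    while stack:
        node = stack.pop()
        for cited in graph.get(node, ()):
            if cited not in seen:
                seen.add(cited)
                stack.append(cited)
    return seen


def citation_rings(graph):
    """Groups of two or more papers that all cite each other, directly or indirectly."""
    rings, assigned = [], set()
    for paper in sorted(graph):
        if paper in assigned:
            continue
        # Papers that `paper` can reach and that can reach `paper` back.
        ring = {p for p in reachable(graph, paper)
                if paper in reachable(graph, p)} | {paper}
        if len(ring) > 1:
            rings.append(ring)
            assigned |= ring
    return rings


def laundered_papers(graph):
    """Papers outside a ring whose references all fall inside that ring."""
    flagged = {}
    for ring in citation_rings(graph):
        for paper, refs in graph.items():
            if paper not in ring and refs and refs <= ring:
                flagged[paper] = sorted(ring)
    return flagged


print(laundered_papers(citations))  # {'D': ['A', 'B', 'C']}
```

The point of the toy example is that paper D looks well supported when read alone; only the graph view shows that its "evidence base" never touches anything outside the ring.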

The Unseen Battlefield

A tiny team generating the revenue of a mid-sized corporation. An anonymous actor swaying an election with a single audio file. A predatory journal corrupting the global scientific record from the shadows. These are not isolated incidents. They are tremors along the same fault line, the Great Fracture created by AI as a multiplier of human agency.

The power is here. It is in the hands of the small, the fast, and the asymmetric. The critical question is no longer what our machines can do. It is whether our minds, with their paleolithic biases and outdated instincts for risk, are remotely equipped to pilot them. The greatest bottleneck in the AI era is not the technology. It is us.

Romain Peter is a French philosophy teacher and PhD student in the philosophy of mathematics who is fascinated by the transformative potential of AI. He sees AI not just as technology, but as a catalyst for fundamentally new possibilities and ways of thinking.

He seeks to leverage his philosophical background to explore the evolving landscape of AI, focusing on the uncharted territories and profound implications that lie ahead. His academic research focuses on systems and modular structures in mathematical and philosophical theories.